Sound synthesis is to generate a signal with desired acoustical effects and can be

useful in sound design. Some common techniques include additive synthesis, granular

synthesis, and physical modelling. This project aims to synthesize the handpan sound

using modal synthesis. The model was devised by extracting the frequency peaks and

bandwidths of the sound source. Thirteen recorded samples of the impulse response

of each note were analyzed and recreated with resonance filters designed in MATLAB,

and a song was played with realistic synthesized sound for demonstration.

Introduction

Physical model synthesis is a technique to simulate the vibrations of objects under various excitations. It requires a description of the vibrational characteristics and the coupling interactions of the physical structures. Modal synthesis can be seen as a special physical modelling technique in which the vibrations of resonant structures are simulated based on a modal representation, and the interactions between structures are calculated by the time step of the corresponding equations [1].

Modal Synthesis

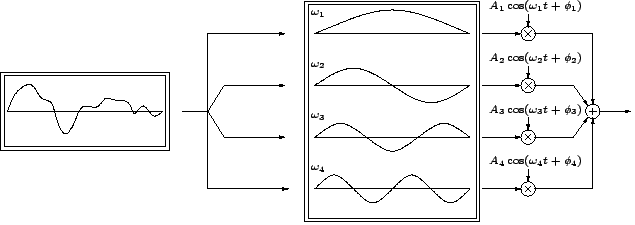

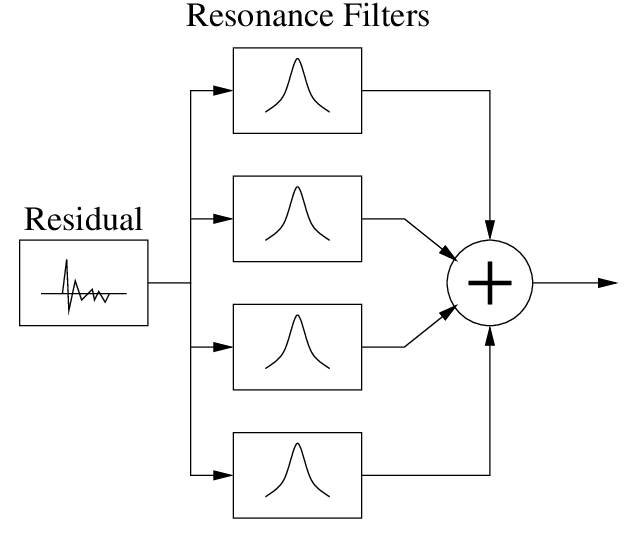

Modal synthesis is a technique to perform vibration analysis of a system by determining its mode shapes and frequencies. For example, a signal shown in Figure 1 can be seen as a superposition of various modes; each has a particular frequency [2]. It can be re-assembled by combining such frequencies and selected modal information. Modal synthesis is “both effective and efficient for recreating the sounds of objects that exhibit a relatively small number of strong decaying modes” [3]. Some good candidates for such technique are plucked strings, a marimba struck by a mallet or a bar hit by a hammer. It can also use filter banks (i.e. a lot of filters) to simulate all the resonant modes of the system, as illustrated in Figure 2 [4]. Resonance filters can approximate the spectral and temporal profile of the sound: the radius of the filter, which is related to its bandwidth, gives insights into the decay time.

Handpan

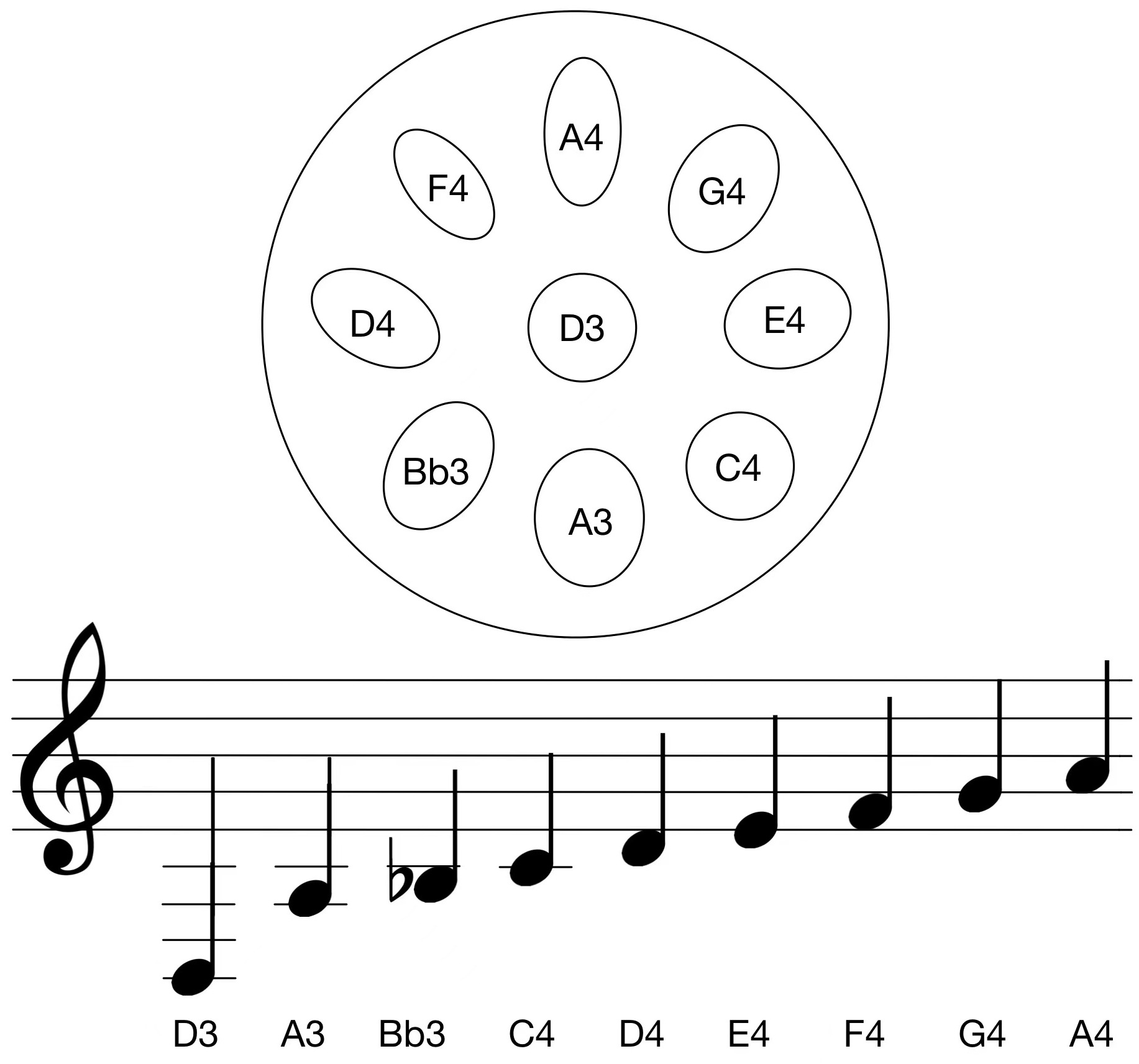

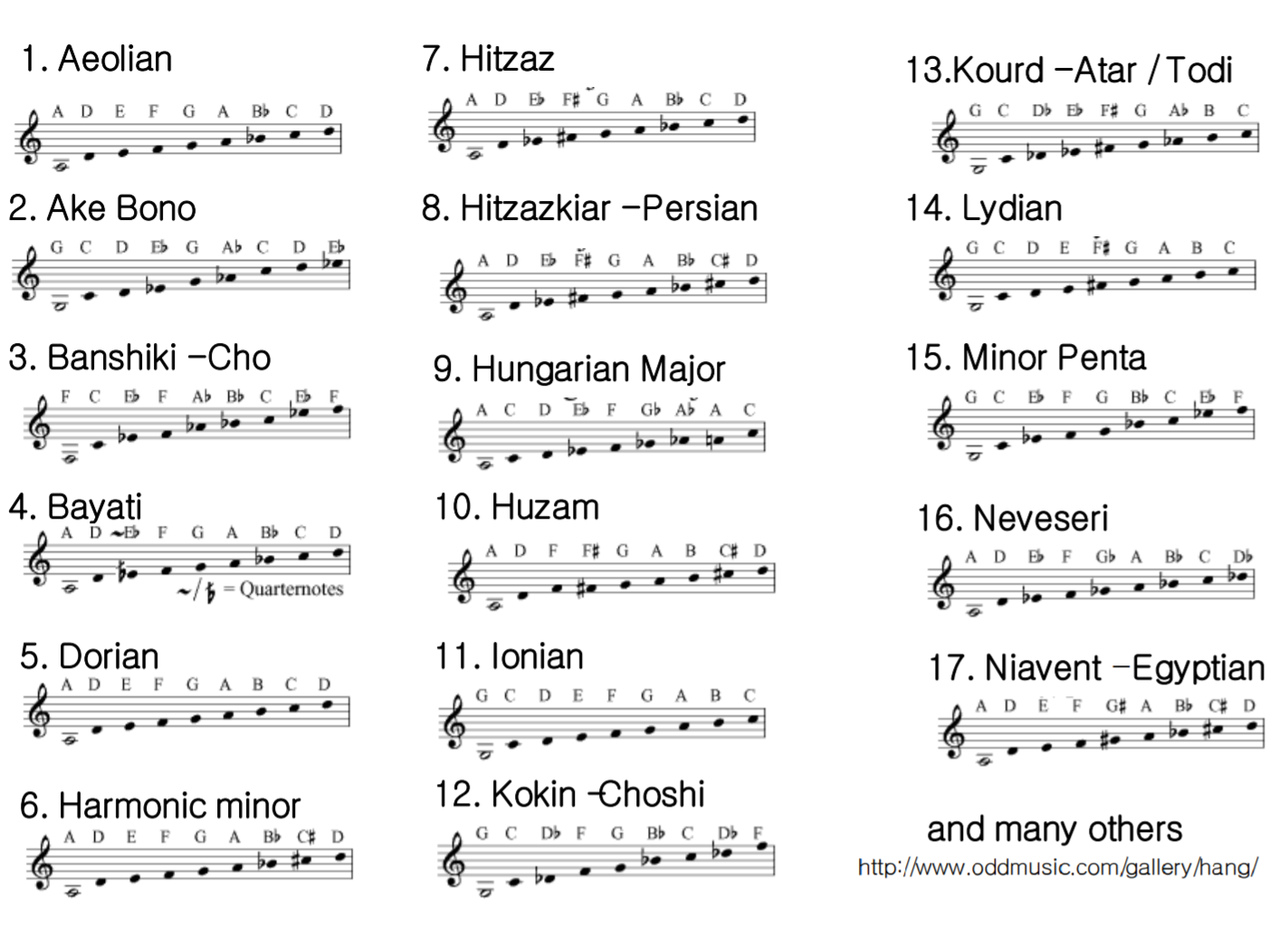

A handpan instrument is a group of metallic percussion instruments played with hands that are similar to Hang® (created by PANArt tuners Sabina Schärer and Felix Rohner in 2000 in Switzerland [5]), consisting of two hemispherical shells of nitrided or stainless steel. Its extraordinary sound is often described as calming and peaceful, which can be used for stress release [6]. Originated from the Caribbean steelpan instrument, a handpan typically has 8-9 notes on a pentatonic scale. Figure 3 is a sample image of a free integral Hang® built by PANArt, where seven harmonically tuned notes are around a deep central tone, and each excites the Helmholtz resonance in the body of the instrument [7] [8]. Figure 4 is a sample tuning scale commonly known as Kurd D, adapted from [9]. It can be tuned in a wide range of scales; some examples are illustrated in Figure 5 [10].

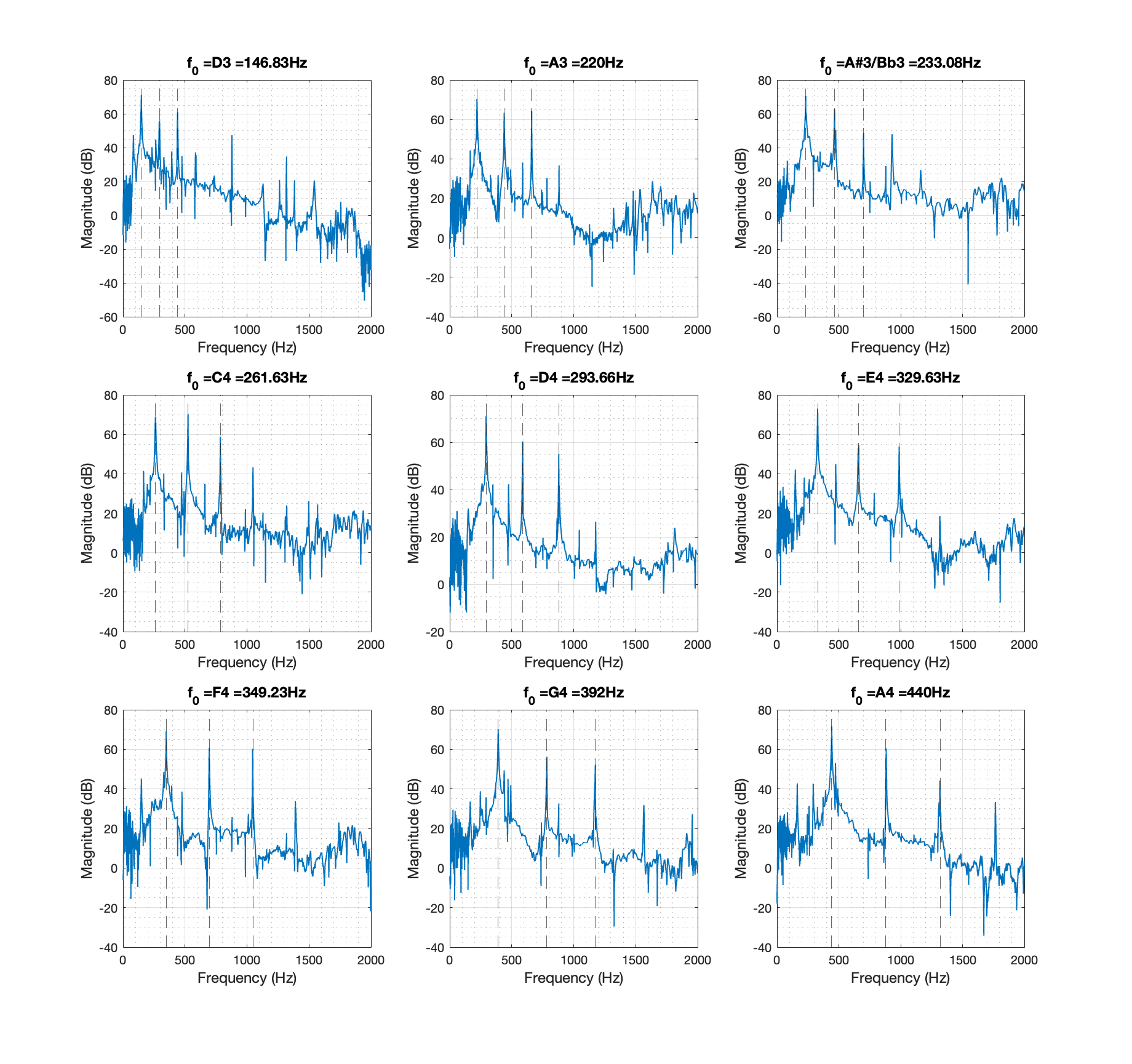

The playing technique of a handpan is to strike it with the hand, either using fingertips or the palm. Different manner can considerably influence the quality of the sounds: the spectral content, the decay time, and other features of the amplitude envelope depend on the intensity of the hit [9]. Tapping with a rubber mallet or just fingertips on a single note area can excite the fundamental, the second, and the third harmonics, as illustrated in Figure 6. The harmonic ratio of the most prominent spectral peaks is 1 : 2 : 3, and striking one note can also make other notes vibrate [11].

Methods

Since each note is impulsively excited, the handpan can be modelled with a bank of resonance filters, and each is centred at particular frequency components. To obtain the parameters (gains and decay times) for these filters, one can tap each note area slightly with one hand or a rubber mallet and record the impulse response. A set of recorded samples are stored on the Github repo [12]. Performing FFT (Fast Fourier Transform) on each sample and finding peaks of the spectra gives information on the central frequency and amplitude of each vibration mode. We can then estimate the peak bandwidth at a level of -3 dB to find the pole radius, which relates to the quality factor Q and the decay constant a. The feedback coefficients of a second-order IIR (infinite impulse response) filter can also be calculated to generate an impulse response. Finally, we can excite the impulse response with a residual obtained from the original signal. The detailed procedure with equations is listed below, and the MATLAB script is attached in Appendix A.

Resonance Filter Bank

In order to simulate a decaying waveform, a digital resonator centred at frequency f can be designed to create an impulse response. This was achieved using second-order IIR filters with feedback coefficients of a1 and a2, with the following specifications [4]:

- difference equation of a second-order filter: y[n] = b0 * x[n] + b1 * x[n - 1] + b2 * x[n - 2] - a1 * y[n - 1] - a2 * y[n - 2], n = 0, 1, 2, …,

- b0 = peak amplitude,

- a1 = -2 * r * cos(2 * pi * f * Ts), r = pole radius, Ts = sample period = 1/fs

- a2 = r^2,

- r = e^(-pi * B * Ts), B = 3dB-bandwidth [13].

Therefore, we need to extract each peak’s frequency and amplitude and find their 3-dB bandwidth.

Parabolic Interpolation of Spectral Peaks

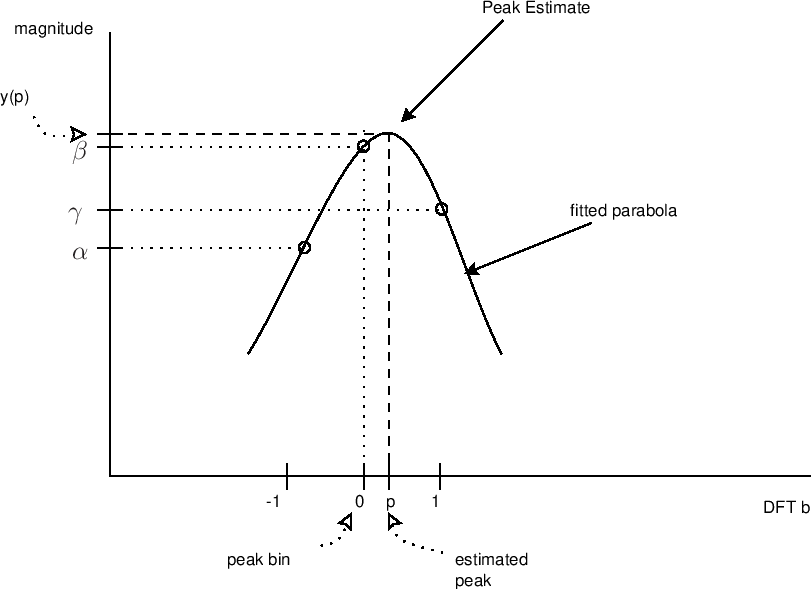

The frequency resolution of an N-point DFT (Discrete Fourier Transform) is fs/N Hz, where fs is the sampling frequency. Since the peak value is not necessary at the bin value, a parabola was fitted to the spectral data to estimate the peak and amplitude values based on the three samples nearest the peak, as illustrated in Figure 7 [14].

A parabola can be written as y(x) = a(x-p)^2 + b, in which p is the peak location in bins, b is the peak amplitude in dB, and a is the curvature. The peak location can be extracted from the three nearest samples y(-1) = alpha, y(0) = beta, and y(1) = gamma as: p = (0.5) * (alpha - gamma) / (alpha - 2 * beta + gamma) = [-1/2, 1/2]. Therefore, the estimated peak value y(p) is: y(p) = beta - 0.25 * (alpha - gamma) * p [14].

%% find peaks of the spectrum using parabolic interpolation

% ref: https://ccrma.stanford.edu/~jos/sasp/Quadratic_Interpolation_Spectral_Peaks.html

Y = abs(Y(1:ceil(N/2))); % keep half of fft

Xi = [];

threshold = 10^(40/20); % dB converted to linear value

for n = 2:length(Y)-2 % search for peak indices

if Y(n+1) <= Y(n) && Y(n-1) < Y(n) && Y(n) > threshold

Xi = [Xi, n];

end

end

Yi = zeros( size( Xi ) );

for n = 1:length(Xi)

alpha = Y(Xi(n)-1);

beta = Y(Xi(n));

gamma = Y(Xi(n)+1);

Xii = 0.5 * (alpha - gamma) / (alpha - 2 * beta + gamma);

% interpolated bin value (-1/2 to +1/2)

Yi(n) = beta - 0.25 * (alpha - gamma) * Xii;

% interpolated peak value

if Xii > 0

Xi(n) = f(Xi(n)) + Xii*(f(Xi(n)+1)-f(Xi(n))); % bin value in Hz

else

Xi(n) = f(Xi(n)) + Xii*(f(Xi(n))-f(Xi(n)-1)); % bin value in Hz

end

end

3dB-Bandwidth Estimation

After finding the frequency peaks, their 3dB-bandwidth can be calculated directly from the powerbw() function [15]. The periodogram power spectral density (PSD) was first estimated through the periodogram() function, and their 3dB-bandwidth was obtained in each peak by specifying the frequency range [16].

%% find -3dB Bandwidth

[Pxx,f2] = periodogram(y(sigIdx),rectwin(N),[],fs);

for i = 1:length(Xi)

figure(1)

xline(Xi(i))

fl = Xi(i)-10;

fr = Xi(i)+10;

bw(i) = powerbw(Pxx(f2>fl & f2 < fr), f2(f2>fl & f2 < fr));

end

bw;

factor = 0.6;

B = factor*bw;

Apply the Filters

Given all the parameters being estimated above, the impulse response of each filter can then be applied with the residual of the original signal to simulate a realistic sound.

%% IIR filter parameters

r = exp(-pi*B/fs);

b0 = Yi;

a1 = -2*r.*cos(2*pi*Xi/fs);

a2 = r.^2;

%% Create impulse response of filter bank

N = length(y);

x = [1; zeros(N-1, 1)];

y2 = filter( b0(1), [1 a1(1) a2(1)], x );

for n = 2:K

y2 = y2 + filter( b0(n), [1 a1(n) a2(n)], x );

end

y1 = y2 / max(abs(y2));

useResidual = true;

if useResidual

Y = fft(y);

Y2 = fft(y2);

R = Y ./Y2;

r = 50*real(ifft(R));

y2 = filter( b0(1), [1 a1(1) a2(1)], r );

for n = 2:K

y2 = y2 + filter( b0(n), [1 a1(n) a2(n)], r );

end

end

y2 = y2 / max(abs(y2));

Results

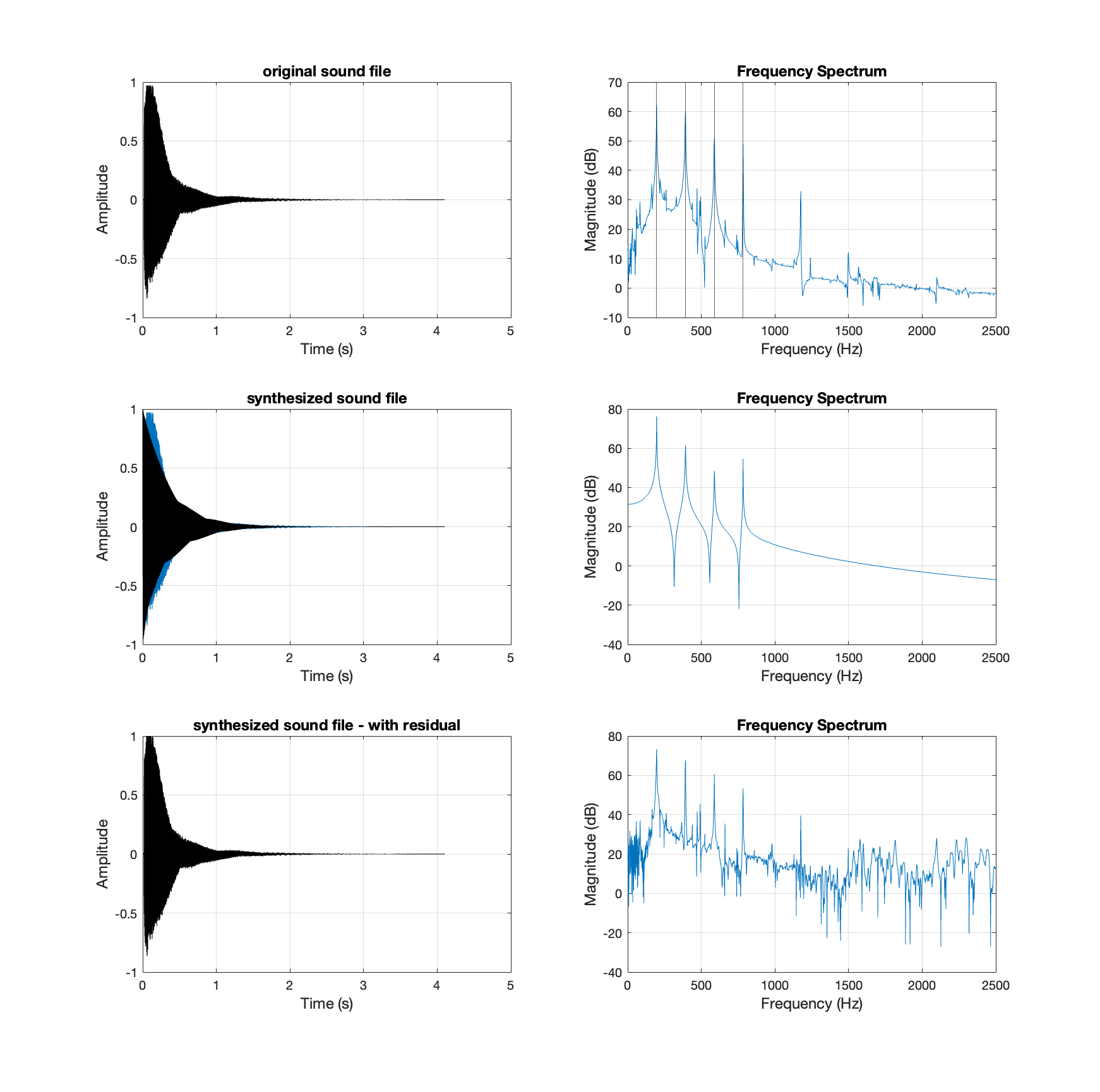

A total of 13 recorded samples were obtained and simulated via modal synthesis with resonance filters, as listed below (click the play button). In addition, the time and frequency domain of each signal was plotted, and an example of note G3 is illustrated in Figure 8.

The recorded sample of Note D3:

The impulse response of resonance filters of Note D3 (no residual):

The synthesized Note D3 excited by residual:

The recorded sample of Note G3:

The impulse response of resonance filters of Note G3 (no residual):

The synthesized Note G3 excited by residual:

The recorded sample of Note A3:

The impulse response of resonance filters of Note A3 (no residual):

The synthesized Note A3 excited by residual:

The recorded sample of Note Bb3:

The impulse response of resonance filters of Note Bb3 (no residual):

The synthesized Note Bb3 excited by residual:

The recorded sample of Note B3:

The impulse response of resonance filters of Note B3 (no residual):

The synthesized Note B3 excited by residual:

The recorded sample of Note C4:

The impulse response of resonance filters of Note C4 (no residual):

The synthesized Note C4 excited by residual:

The recorded sample of Note D4:

The impulse response of resonance filters of Note D4 (no residual):

The synthesized Note D4 excited by residual:

The recorded sample of Note E4:

The impulse response of resonance filters of Note E4 (no residual):

The synthesized Note E4 excited by residual:

The recorded sample of Note F4:

The impulse response of resonance filters of Note F4 (no residual):

The synthesized Note F4 excited by residual:

The recorded sample of Note Gb4:

The impulse response of resonance filters of Note Gb4 (no residual):

The synthesized Note Gb4 excited by residual:

The recorded sample of Note G4:

The impulse response of resonance filters of Note G4 (no residual):

The synthesized Note G4 excited by residual:

The recorded sample of Note A4:

The impulse response of resonance filters of Note A4 (no residual):

The synthesized Note A4 excited by residual:

The recorded sample of Note B4:

The impulse response of resonance filters of Note B4 (no residual):

The synthesized Note B4 excited by residual:

A song Yan Yu Qing Dai 烟雨青黛 written by Chinese musician Shi, Lei was also recreated with synthesized sounds:

Discussion

The synthesized handpan sound is very realistic, and one can barely distinguish it from the recorded sample. The frequency spectrum of each note was analyzed to find peaks in frequency and amplitude, and the bandwidth was successfully obtained from the MATLAB functions. Modal synthesis provides a simple and efficient solution to synthesize this type of metallic percussion instrument. So it can be applied to other impulsively excited objects. One of the challenges was to obtain the clean recorded samples of the impulse response of each note, as no handpan was available on hand. Most online recordings have multiple notes playing successively or simultaneously, or only one or two impulse responses from the same handpan were published. Some tapes are noisy, and the results would not be ideal as the noise could also be synthesized. Removing noise would lose some of the spectral content of the sound. Luckily, a set of relatively clean recorded samples was found on a GitHub repo.

If one wants to record the impulse response by themselves, the experimental set-up can be found in Eyal Alon’s master’s thesis, “Analysis and Synthesis of the Handpan Sound”, which details the recording procedure, analysis and synthesis of handpan sound.

Another challenge was to adjust the bandwidth. If one uses the bandwidth obtained directly from the powerbw() built-in function in MATLAB, the decay time would be too long or too short, resulting in an undesired sound. Therefore, a fudge factor was applied to the bandwidth to adjust the values. Each note has a different fudge factor, and the effect can be visualized by looking at the time domain of the impulse response of the resonance filters.

Moreover, if the impulse response were not excited by the residual from the original sample, the synthesized sound would not be so realistic; it is softer and more synthetic, as one can hear from the recordings in the Results section.

Conclusion

The handpan sound was successfully recreated with the modal synthesis technique using resonance filters, and a song was generated using the synthesized sound. It provides a simple yet efficient way to simulate impulse responses of objects. The model can be expanded to every handpan sound or other instrument. Then it can be fed into a computer program (such as Max/MSP, Ariduino IDE, etc.) to simulate a real handpan on a physical model. One example would be the Mini Handpan project I did last year for MUMT 306. Another implementation is to build a virtual model as it exists here. This virtual handpan is ideal for practice and choosing the desired scale before buying, as a physical handpan is expensive and heavy.

References

[1]: Morrison, Joseph Derek, and Jean-Marie Adrien. “MOSAIC: A Framework for Modal Synthesis.” Computer Music Journal 17, no. 1 (1993): 45–56. https://doi.org/10.2307/3680569.

[2]: Bilbao, Stefan. “Modal Synthesis”, in Numerical Sound Synthesis, https://ccrma.stanford.edu/~bilbao/booktop/node14.html, online book, 2006 edition, accessed 22 April 2022.

[3]: Scavone, Gary P. “Modal Synthesis”, in MUMT 307: Music Audio Computing II, https://www.music.mcgill.ca/~gary/307/week10/modal.html, online book, 2022 edition, accessed 22 April 2022.

[4]: Scavone, Gary P. “Digital ‘Resonators’”, in MUMT 307: Music Audio Computing II, http://www.music.mcgill.ca/~gary/307/week10/node4.html, online book, 2022 edition, accessed 22 April 2022.

[5]: PANArt. “Copyright: Questions and Answers”. Panart (en). Accessed 22 April 2022. https://panart.ch/en/articles/copyright-questions-and-answers.

[6]: Malzer, Clara. “The Magic of The Handpan: The Instrument For Stress-Release And Harmony”. The Conscious Club. Accessed 22 April 2022. https://theconsciousclub.com/articles/2019/10/24/the-magic-of-the-handpan-the-instrument-for-stress-release-and-harmonynbsp.

[7]: Rohner, Felix, and Sabina Schärer. “History, Development and Tuning of the Hang”, 2007. http://www.hangblog.org/panart/Paper-Hang-2007.pdf.

[9]: Wessel, David, Andrew Morrision, and Thomas D. Rossing. “SOUND OF THE HANG”. ACOUSTICS.ORG, 4 July 2008. https://acoustics.org/pressroom/httpdocs/155th/wessel.htm.

[10]: Rossing, Thomas D., Andrew Morrision, Uwe Hansen, Felix Rohner, and Sabina Schärer. “ACOUSTICS OF THE HANG: A Hand-Played Steel Instrument”, 2007. http://www.hangblog.org/panart/2-S2-4-IsmaRossing.pdf.

[11]: Morrison, Andrew, and Thomas D. Rossing. “The Extraordinary Sound of the Hang”. Physics Today 62, no. 3 (March 2009): 66–67. https://doi.org/10.1063/1.3099586.

[13]: Smith, J.O. “Resonator Bandwidth in Terms of Pole Radius”, in Introduction to Digital Filters with Audio Applications, https://ccrma.stanford.edu/~jos/fp/Resonator_Bandwidth_Terms_Pole.html, online book, 2007 edition, accessed 22 April 2022.

[14]: Smith, J.O. “Quadratic Interpolation of Spectral Peaks”, in Spectral Audio Signal Processing, https://ccrma.stanford.edu/~jos/sasp/Quadratic_Interpolation_Spectral_Peaks.html, online book, 2011 edition, accessed 22 April 2022.

Appendix

A. Sample MATLAB script of Modal Synthesis.

% A Matlab script to demonstrate modal synthesis, based on course materials

% from MUMT 307 at McGill University by Professor Gary P. Scavone.

clear all; close all; %clc

prompt = "The sound file to synthesize: ";

txt = input(prompt,"s");

fileLoc = 'sounds-original/';

ext = '.mp3';

if isempty(txt)

s_original = strcat(fileLoc,'s1-G3',ext);

else

s_original = strcat(fileLoc,txt,ext);

end

[y, fs] = audioread( s_original );

fs; % sampling rate = 44100 Hz

T = 1/fs; % sampling period

N = length(y); % number of samples in the sound file

Ttot = N./fs; % duration in seconds

t = 0: Ttot/N : Ttot-Ttot/N; % time sequence

figure(1);

subplot(3, 2, 1)

plot( t, y, 'k' )

xlabel('Time (s)');

ylabel('Amplitude');

title('original sound file');

grid on

N = 2^14; % length of FFT

iStart = 10^4; % starting sample of the FFT

sigIdx = iStart:N+iStart-1; % index of the FFT

Y = fft(y(sigIdx)); % FFT

subplot(3, 2, 2)

f = (0:N-1)*fs/N;

plot(f, 20*log10(abs(Y)))

xlim([0 5000/2])

grid

xlabel('Frequency (Hz)')

ylabel('Magnitude (dB)')

title('Frequency Spectrum');

%% find peaks of the spectrum using parabolic interpolation

% ref: https://ccrma.stanford.edu/~jos/sasp/Quadratic_Interpolation_Spectral_Peaks.html

Y = abs(Y(1:ceil(N/2))); % keep half of fft

Xi = [];

threshold = 10^(40/20); % dB converted to linear value

for n = 2:length(Y)-2 % search for peak indices

if Y(n+1) <= Y(n) && Y(n-1) < Y(n) && Y(n) > threshold

Xi = [Xi, n];

end

end

Yi = zeros( size( Xi ) );

for n = 1:length(Xi)

alpha = Y(Xi(n)-1);

beta = Y(Xi(n));

gamma = Y(Xi(n)+1);

Xii = 0.5 * (alpha - gamma) / (alpha - 2 * beta + gamma);

% interpolated bin value (-1/2 to +1/2)

Yi(n) = beta - 0.25 * (alpha - gamma) * Xii;

% interpolated peak value

if Xii > 0

Xi(n) = f(Xi(n)) + Xii*(f(Xi(n)+1)-f(Xi(n))); % bin value in Hz

else

Xi(n) = f(Xi(n)) + Xii*(f(Xi(n))-f(Xi(n)-1)); % bin value in Hz

end

end

K = length(Xi);

Xi = Xi(1:K);

Yi = Yi(1:K);

%% find -3dB Bandwidth

[Pxx,f2] = periodogram(y(sigIdx),rectwin(N),[],fs);

for i = 1:length(Xi)

figure(1)

xline(Xi(i))

fl = Xi(i)-10;

fr = Xi(i)+10;

bw(i) = powerbw(Pxx(f2>fl & f2 < fr), f2(f2>fl & f2 < fr));

end

bw;

factor = 0.6;

B = factor*bw;

%% IIR filter parameters

r = exp(-pi*B/fs);

b0 = Yi;

a1 = -2*r.*cos(2*pi*Xi/fs);

a2 = r.^2;

%% Create impulse response of filter bank

N = length(y);

x = [1; zeros(N-1, 1)];

y2 = filter( b0(1), [1 a1(1) a2(1)], x );

for n = 2:K

y2 = y2 + filter( b0(n), [1 a1(n) a2(n)], x );

end

y1 = y2 / max(abs(y2));

useResidual = true;

if useResidual

Y = fft(y);

Y2 = fft(y2);

R = Y ./Y2;

r = 50*real(ifft(R));

y2 = filter( b0(1), [1 a1(1) a2(1)], r );

for n = 2:K

y2 = y2 + filter( b0(n), [1 a1(n) a2(n)], r );

end

end

y2 = y2 / max(abs(y2));

%% play the sound - original, synthesized, and with residual

sound([y; zeros(10000, 1); y1; zeros(10000, 1); y2], fs)

N2 = length(y2); % number of samples in the sound file

Ttot2 = N2./fs; % duration in seconds

t2 = 0: Ttot2/N2 : Ttot2-Ttot2/N2; % time sequence

figure(1);

subplot(3, 2, 5)

plot(t, y)

hold on

plot( t2, y2, 'k' )

xlabel('Time (s)');

ylabel('Amplitude');

title('synthesized sound file - with residual');

grid on

Y2 = fft(y2);

Y2 = abs(Y2(1:ceil(N2/2)));

YdB2 = 20*log10(Y2);

freqs2 = (1:N2-1) * fs/N2;

freqs2 = freqs2(1:ceil(N2/2));

subplot(3, 2, 6)

plot(freqs2,YdB2)

xlim([0 2500])

xlabel('Frequency (Hz)');

ylabel('Magnitude (dB)');

title('Frequency Spectrum');

grid on

subplot(3, 2, 3)

plot(t, y)

hold on

plot( t2, y1, 'k' )

xlabel('Time (s)');

ylabel('Amplitude');

title('synthesized sound file');

grid on

Y1 = fft(y1);

Y1 = abs(Y1(1:ceil(N2/2)));

YdB1 = 20*log10(Y1);

freqs2 = (1:N2-1) * fs/N2;

freqs2 = freqs2(1:ceil(N2/2));

subplot(3, 2, 4)

plot(freqs2,YdB1)

xlim([0 2500])

xlabel('Frequency (Hz)');

ylabel('Magnitude (dB)');

title('Frequency Spectrum');

grid on

%% store the file

fileLoc2 = 'sounds-modalSynth/';

ext2 = '.wav';

noRes = '-noRes';

if isempty(txt)

s_new = strcat(fileLoc2,'s1-G3',ext2);

s_new1 = strcat(fileLoc2,'s1-G3',noRes,ext2);

else

s_new = strcat(fileLoc2,txt,ext2);

s_new1 = strcat(fileLoc2,txt,noRes,ext2);

end

audiowrite(s_new1, y1, fs)

audiowrite(s_new, y2, fs)